The number of AI solutions in healthcare and specifically radiology, has increased strongly in the past years. However, the more options we are offered, the harder it is to understand their differences. How can radiologists make the best choice when evaluating which AI software to implement into their clinical practice?

When making the final decision, several factors will need to be considered including user-friendliness, integration into the radiology workflow, cost-effectiveness, and, most importantly, the quality of the AI algorithms.

However, the concept of AI quality is rather broad, has a lot of attributes to it, and it can be subjective to the specific task the AI algorithm is created to perform.

To help radiologists ask the right questions and compare solutions fairly, we will cover some of the important attributes that define the AI quality and explain which might be important to consider depending on the radiologist’s goal.

AI algorithm quality

The quality of an AI algorithm can be perceived as the assurance that an algorithm will correctly perform the task it was created to perform. In general, AI quality can be described by attributes such as reliability, conceptual soundness, performance metrics or data quality, the last two being the ones we will primarily focus on in this blog.

However, because of the impact AI in healthcare has on human lives, characteristics like privacy, fairness and transparency also become important when assessing these algorithms. Although important, we will not be focusing on these last characteristics as they can be more subjective and depend on different cultural, ethical, and regulatory standards.

Attributes and metrics that define AI quality

Model performance

When it comes to assessing the performance of an algorithm, professionals often focus on the concept of accuracy. However, it is essential to separate the general concept of accuracy from the specific metric that is used to evaluate performance. Since the terminology overlaps, people tend to focus on the accuracy metric too strongly when evaluating performance. Fortunately, there are a lot of other metrics that can be used to evaluate the performance of a model more extensively, depending on the use case.

Accuracy as a metric

Accuracy as a metric can generally be used to evaluate the performance of an algorithm. Basically, it gives you the ratio of cases in which the algorithm was correct.

However, depending on the use case, accuracy alone can be quite limited or even misleading when the dataset is not balanced. Consider, for example, an algorithm developed for the detection of cancer that is used to screen a normal population. Because the chances of having cancer are extremely low, an algorithm that mistakenly predicts no patient to have cancer can still have a rather high accuracy.

Sensitivity vs Specificity

Two important concepts to evaluate the performance of a healthcare AI algorithm are sensitivity and specificity.

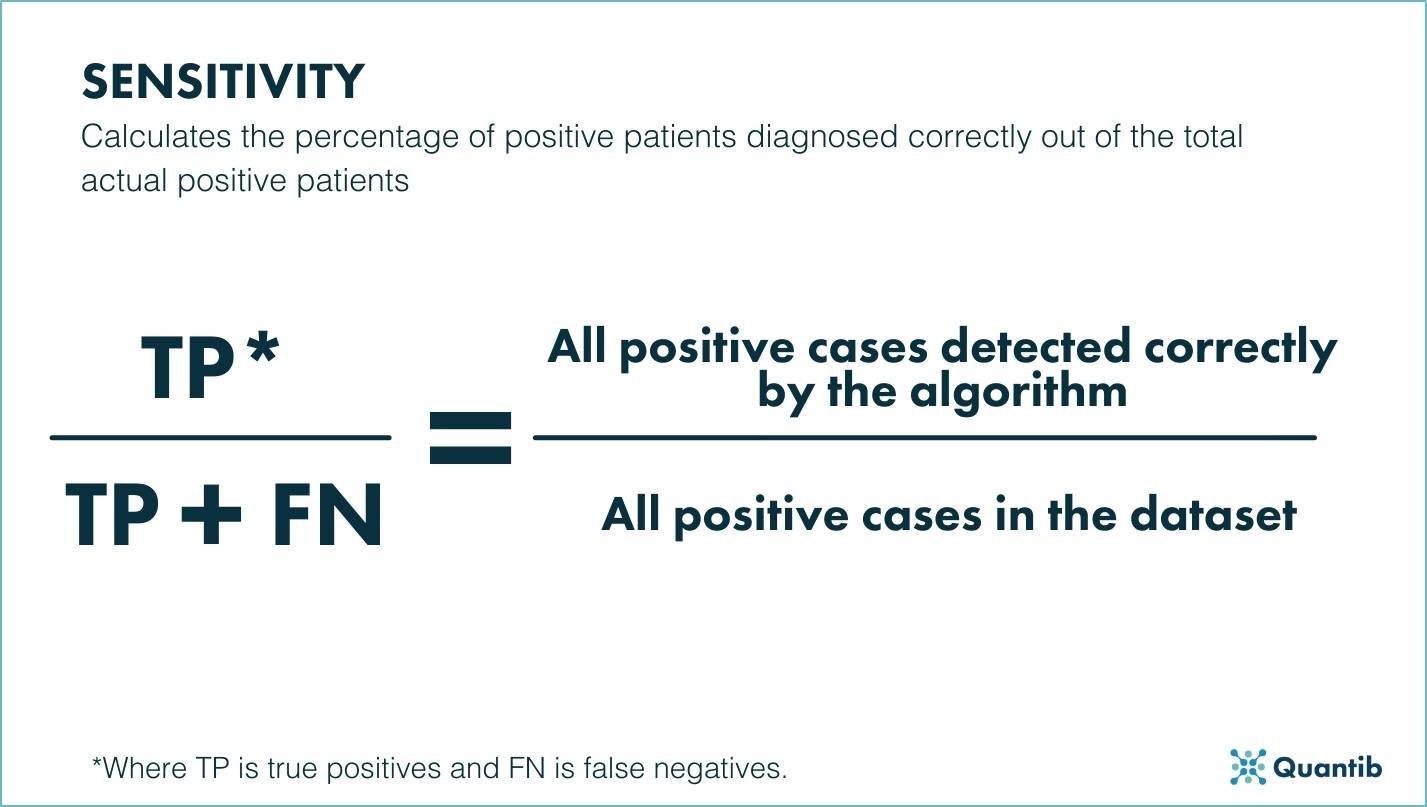

The sensitivity (also known as recall) metric gives the percentage of pmaybeositive patients diagnosed correctly out of the total actual positive patients. For example, in healthcare imaging, this metric shows if the AI algorithm can identify correctly which patients have a disease.

Figure 1. Schematic representation of the sensitivity metric.

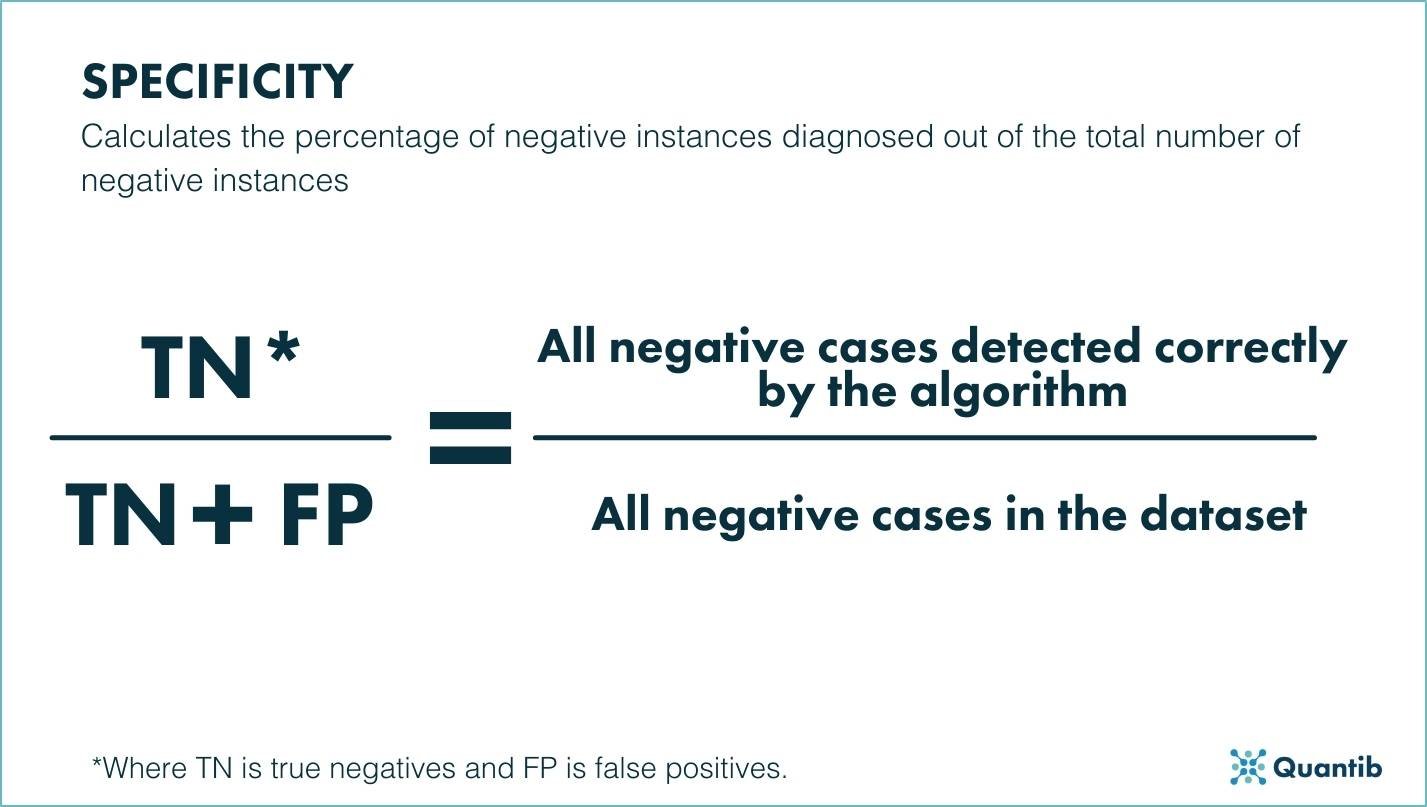

Specificity, on the other hand, calculates the percentage of negative instances diagnosed out of the total number of negative instances. In the previous example for lesion detection, this metric will allow radiologists and clinicians to understand if the AI algorithm can, in the case of prostate cancer, correctly differentiate between malignant prostate lesions and other regions of the prostate in the imaging examination.

Figure 2. Schematic representation of the specificity metric.

A good algorithm will be one that has both a high sensitivity, and a high specificity. However, in real life use cases there is typically a trade-off between these metrics as they are not fully compatible. For example, an algorithm that is 100% sensitive for lesion detection will most likely end up with a lot of false positives, because it has a low threshold for identifying suspicious regions.

It is essential to note that depending on the goal, some healthcare algorithms should be more specific, to avoid overtreatment, and some should be more sensitive to avoid overlooking possible findings. For example, an AI algorithm for cancer diagnostics that leans towards a higher sensitivity, would trigger overtreatment, which may in some cases do more harm than good. However, favoring specificity, would mean overlooking cases; also definitely something you want to avoid.

Overlap-based metrics and distance metrics

As previously mentioned, the task an AI algorithm should perform impacts the metrics that are relevant when evaluating said algorithm. For evaluating the quality of segmentation models, metrics such as the Dice similarity coefficient, the mean surface distance (MSD) or Hausdorff distance (HD) are interesting to look at.

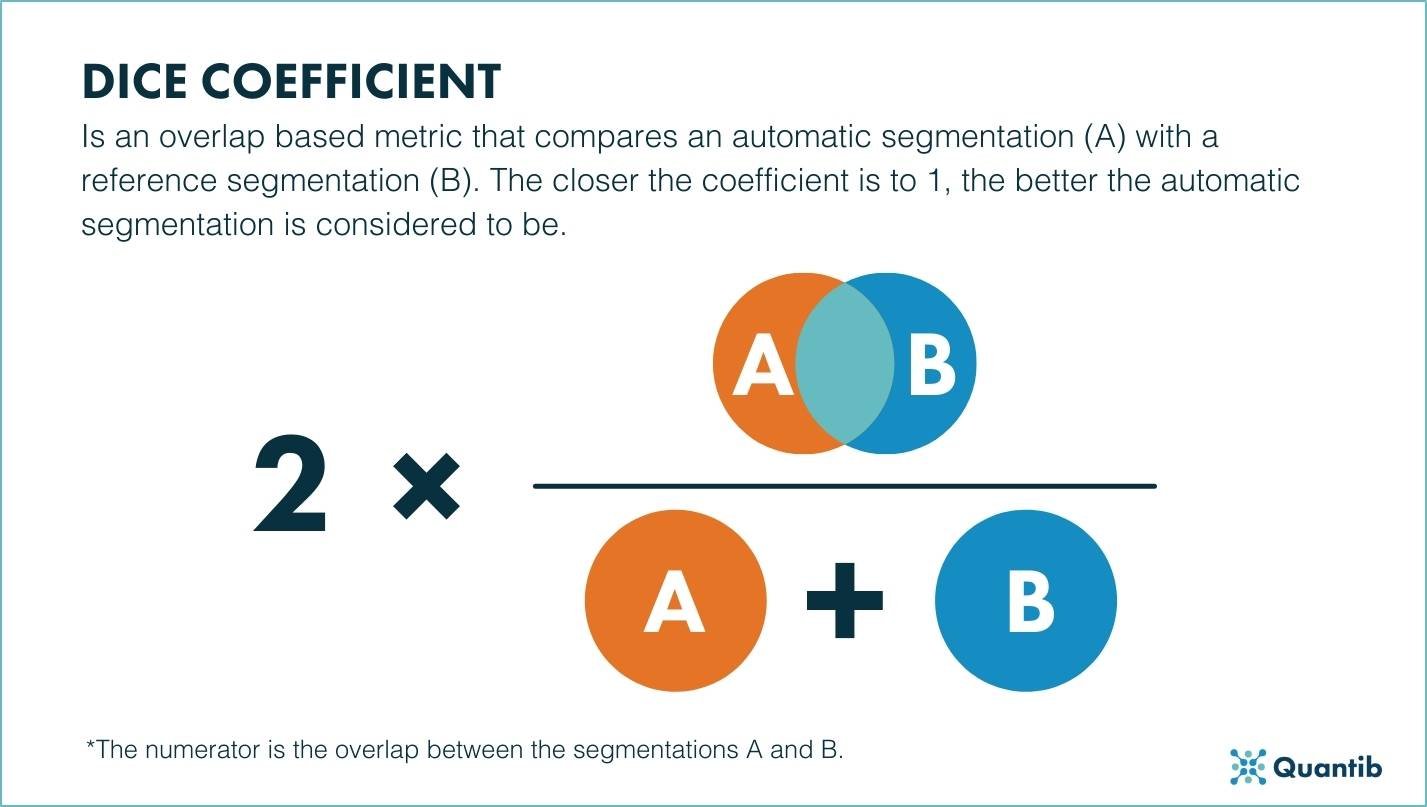

The Dice coefficient is an overlap-based metric that compares the automatic segmentation with a reference segmentation, usually a manually created ground truth. The closer the coefficient is to 1 the more overlap there is between the automatic and the manual segmentation, and the better the automatic segmentation is.

Figure 3. Schematic representation of Dice coefficient.

Although the Dice coefficient may look very informative, it is meaningless for large structures, as it does not specify how the boundaries overlap. In these cases, using the mean surface distance and the Hausdorff distance to evaluate an AI algorithm is a better way to go.

The mean surface distance measures the distance between the surface from the automatic segmentation and the reference segmentation. To calculate it, the mean distance between every voxel of the automatic segmentation and the closest voxel of the manual segmentation and vice versa are determined. The resulting distances are added together and then divided by two to get the final mean surface distance.

Similarly to the mean surface distance, the Hausdorff distance measures all distances from the points in the automatic segmentation to the same points in the reference segmentation. Its value will be equal to the maximum distance of those calculated. This metric is commonly used when evaluating the performance of an AI algorithm used for segmenting large structures.

Both the mean surface distance and the Hausdorff distance are good indicators of inconsistency between the boundaries of both segmentations, so the lower the distance, the more reliable the segmentation can be considered to be.

.jpg?width=1427&name=AI%20algortihm%20quality%20-%20images%20-%20HD%20(new).jpg)

Figure 4. Schematic representation of Hausdorff distance.

When calculating these overlap metrics for the entire AI algorithm, vendors normally compute all of them for each of the patients of the dataset the algorithm is trained on and then decide which is the best measurement to showcase the result, whether that is with an average, a median or simply using a box-whisker plot. We recommend the later as it shows the entire distribution of the data, including the mean value and outliers.

In cases of anatomic segmentations, these overlap and distance metrics are more interesting to consider than the trade-off between sensitivity and specificity, because detecting the organ is hardly ever the problem.

However, there might be times when some of these overlap metrics are more relevant than others. For example, when calculating the prostate volume as input to calculate PSA density, then using an algorithm with a high Dice coefficient might be enough. On the other hand, in cases that involve surgery, knowing the exact boundaries of an organ is essential so using an AI algorithm with low MSD and HD is better.

Quality of the data

When we talk about data quality, we refer to characteristics of the dataset used to develop the algorithm. To be considered a good algorithm, it has be trained on a dataset that is representative of the population it is going to be applied to, in order to meet accuracy standards. For example, when applied to radiology, that would be a dataset that contains scans obtained by different types of scanners, different parameters and configuration, different image characteristics and, perhaps most importantly, scans from an heterogenous population.

If an algorithm is trained on a dataset that mostly includes scans from a single manufacturer or from a specific age range, the results the algorithm provides might be biased. Therefore, the quality of the dataset used to develop the algorithm, has a direct impact on the clinical quality of the algorithm.

An algorithm is considered biased when it favors a specific type of input dataset or an answer that is erratic. Therefore, bias can have a big impact on how reliable the results provided by an algorithm are. To avoid it, it is important that vendors use a well distributed dataset for training and testing.

Do you want to know more? Check out different examples that cover the role of bias in healthcare AI.

As a radiologist, directly assessing the quality of the data used to train and test an AI algorithm is complicated and practically impossible. However, asking the vendor about the scope of their training and testing datasets, is a good way of making sure the used data meets the standard of quality you are looking for.

Other clinical performance validation of AI algorithms

Although it is not a direct requirement from the regulatory perspective, AI vendors in healthcare tend to use inter-observer variability to compare the performance of their AI algorithm to common clinical practice. Because of this, the performance of an AI algorithm should ideally be similar to, or better than inter-observer variability .

Therefore, when comparing different solutions, this is another thing radiologists might want to consider.

Both the experience of the radiologists and their personal preference play a big part when it comes to choosing one AI solution over another. Although experienced radiologists will benefit greatly from an algorithm that presents a good trade-off between sensitivity and specificity, they might want to overlook a stellar specificity in favor of a better sensitivity as they can easily dismiss false positives. On the other hand, AI software used for training may need to prioritize specificity over sensitivity as it might be harder for radiology residents to dismiss false positives than adding missed lesions.

Conclusion

To conclude, there is not one unique metric to look out for when assessing the quality of an AI algorithm. The best way to assess an algorithm depends on the type of algorithm, the goal, the clinical use case and the experience and personal preference of the radiologist.

At Quantib, we understand that these parameters change over time. We therefore believe it is essential to constantly implement customer feedback to make sure that our algorithms remain reliable and relevant for our actual and potential customers.